The Crucial Role of QA Testers in Software Development

Quality assurance testing is essential to the software development process. While developers create new features and programs, QA testers assess and validate the product. Their input helps ensure that errors are found and fixed before the software is released to the general public.

Let’s look at the vital role QA testers play today. We’ll review ten major areas that QA testing affects and why developers should rely on it. Understanding the tester’s viewpoint can promote cooperation and result in better software.

Manual vs. Automated Testing

Both manual and automated testing have pros and cons that are important to understand. Manual testing allows QA testers to think creatively and explore unexpected scenarios, but it is time-consuming, and repetitive tasks are prone to human error. Automated testing addresses this by executing the same tests programmatically and consistently, but building robust automated test suites requires significant effort and maintenance.

In practice, most mature testing programs blend both. Manual testing still has value for usability evaluations and ad hoc exploratory testing, while core functionality and regression testing are best suited to automation.
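To make the automation side concrete, here is a minimal sketch of a repeatable check written with Python's built-in unittest module. The function apply_discount and its rules are hypothetical stand-ins for real application code; the point is only that the same assertions run identically on every build.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical function under test: integer-percent discount on a price in cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - (price_cents * percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(10_000, 15), 8_500)

    def test_zero_and_full_discount(self):
        self.assertEqual(apply_discount(999, 0), 999)
        self.assertEqual(apply_discount(999, 100), 0)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1_000, 101)


def run_suite() -> unittest.TestResult:
    """Run the checks programmatically, as a CI job might."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Checks like these are cheap to repeat, which frees manual testers to spend their time on exploratory and usability work.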

Requirements Gathering and Documentation

Thorough requirements are the foundation of effective testing, but gathering and documenting them well takes careful effort. QA testers must understand both business needs and technical capabilities. They analyze product visions, feature descriptions and wireframes or prototypes, and discuss them with stakeholders.

Some methods for requirements elicitation include interviews, workshops, surveys and prototyping sessions. Ambiguous or incomplete requirements lead to defects, so testers validate their understanding with stakeholders. Well-structured documentation formats like IEEE, ITS or structured text aid communication. Requirements should be traceable, measurable, unambiguous and modifiable as needs evolve.

Testers also identify non-functional qualities like performance, security and cross-browser compatibility, and address edge cases around localization, accessibility and regulatory compliance. Requirements are prioritized and linked to test plans and test cases. Documentation is version-controlled and available to all teams. With practice, testers build expertise in requirements best practices.
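One way to picture traceability is as a simple lookup from requirements to the test cases that cover them. The sketch below is illustrative only: the Requirement and PlannedTest classes, the ID scheme and the priority scale are assumptions, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    description: str
    priority: int  # assumed scale: 1 is the highest priority


@dataclass
class PlannedTest:
    case_id: str
    covers: list[str] = field(default_factory=list)  # requirement IDs this test exercises


def uncovered_requirements(requirements, planned_tests):
    """Return requirements with no linked test, highest priority first."""
    covered = {req_id for test in planned_tests for req_id in test.covers}
    missing = [r for r in requirements if r.req_id not in covered]
    return sorted(missing, key=lambda r: r.priority)


requirements = [
    Requirement("REQ-1", "User can reset password", priority=1),
    Requirement("REQ-2", "Pages load in the supported browsers", priority=2),
    Requirement("REQ-3", "Dates follow the user's locale", priority=3),
]
planned_tests = [PlannedTest("TC-10", covers=["REQ-1"])]

for req in uncovered_requirements(requirements, planned_tests):
    print(f"{req.req_id} has no test coverage: {req.description}")
```

A report like this makes gaps visible before testing starts, rather than after a defect reaches users.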

Usability and User Experience Evaluation

Thorough usability testing uncovers many issues that developers may not be aware of. QA testers recruit a sample of actual users matching the target demographics. They observe unguided interactions using techniques like the think-aloud protocol and task-based scenarios, and complement them with heuristic evaluation.

Qualitative feedback on tasks like account creation flows, navigation structures, or form validation helps spot confusion points. Quantitative metrics include task success rates and completion times. Testers may conduct A/B tests to compare design variations. Surveys measure satisfaction and ease of use. Remote usability testing platforms now allow broad participant pools.
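The quantitative metrics mentioned above are straightforward to compute once sessions are recorded. This is a small sketch with made-up session data; the Session structure and the choice of the median (rather than the mean) for completion time are assumptions for illustration.

```python
from statistics import median
from typing import NamedTuple


class Session(NamedTuple):
    participant: str
    completed: bool   # did the participant finish the task?
    seconds: float    # time spent on the task


def task_success_rate(sessions) -> float:
    """Fraction of sessions in which the task was completed."""
    return sum(s.completed for s in sessions) / len(sessions)


def median_completion_time(sessions) -> float:
    """Median time on task, counting successful sessions only."""
    return median(s.seconds for s in sessions if s.completed)


# Illustrative data for an account-creation task
sessions = [
    Session("P1", True, 42.0),
    Session("P2", True, 58.0),
    Session("P3", False, 120.0),
    Session("P4", True, 65.0),
    Session("P5", True, 51.0),
]

print(f"Success rate: {task_success_rate(sessions):.0%}")
print(f"Median completion time: {median_completion_time(sessions)} s")
```

The median is used here because a single participant who struggles badly can distort an average in small samples.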

Findings are presented using a structured format covering affected areas, observed issues, impact severity and recommendations. Development teams prioritize fixes to address the most critical problems. Iterative usability evaluations refine the experience based on user research. This collaborative process delivers products with intuitive, solid design.

Compatibility and Platform Testing

Cross-browser compatibility remains a challenge area. Testers methodically try the application on primary desktop and mobile browsers like Chrome, Firefox, Safari, Edge, and stock Android and iOS browsers. They check for layout rendering differences, broken interactions or crashes on each one.

Operating system compatibility is also crucial. QA testers evaluate whether the software functions as expected on Windows, macOS, Linux, Android, and iOS versions. They test on virtual machines to replicate a broad range of environments.

Platform testing also covers web apps on devices like phones, tablets, and desktops with different screen sizes, resolutions, and aspect ratios. APIs are tested for compatibility across programming languages and frameworks, too. Exhaustive compatibility testing requires significant effort but pays off in avoiding user frustration.
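A compatibility matrix is essentially the cross product of browsers and platforms, minus the combinations that do not exist. The sketch below builds one; the browser and platform lists come from this article, while the exclusion set is an assumption a team would replace with its own support policy.

```python
from itertools import product

BROWSERS = ["Chrome", "Firefox", "Safari", "Edge"]
PLATFORMS = ["Windows", "macOS", "Linux", "Android", "iOS"]

# Assumed exclusions for illustration: combinations the team does not test.
UNSUPPORTED = {
    ("Safari", "Windows"),
    ("Safari", "Linux"),
    ("Safari", "Android"),
}


def build_matrix(browsers, platforms, unsupported):
    """Return every (browser, platform) pair that should be tested."""
    return [
        (browser, platform)
        for browser, platform in product(browsers, platforms)
        if (browser, platform) not in unsupported
    ]


matrix = build_matrix(BROWSERS, PLATFORMS, UNSUPPORTED)
print(f"{len(matrix)} combinations to cover")
```

Generating the matrix from data keeps it easy to review and to feed into a parameterized test runner.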

Security Vulnerability Assessment

Security testing evaluates an application’s resistance to common attacks. Manual testers probe for injection flaws by submitting malicious payloads. They look for cross-site scripting (XSS) vulnerabilities by trying to inject JavaScript. Testing authentication involves attempts to bypass login, escalate privileges or decrypt sensitive data.

Automated scanners can detect low-hanging fruit, but dedicated security testers have advanced skills. They think like attackers to find flaws the tools may miss. In penetration testing, testers attack live systems ethically and with explicit permission. Vulnerable components are identified, and responsible disclosure policies are followed when coordinating fixes.

Security best practices include threat modelling to understand risks, secrets management, input validation, output encoding, etc. As attacks evolve, security testing requires constant learning. Mature programs have security experts who specialize in this critical area.
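Input validation and output encoding can be illustrated with a few lines of standard-library Python. This is a minimal sketch, not a complete defence: the username rule and the render_comment helper are hypothetical, and real applications normally rely on their framework's templating and validation layers.

```python
import html
import re

# Assumed allowlist rule: 3 to 20 letters, digits or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")


def is_valid_username(value: str) -> bool:
    """Input validation: accept only values matching the allowlist."""
    return USERNAME_PATTERN.fullmatch(value) is not None


def render_comment(comment: str) -> str:
    """Output encoding: escape user text before placing it in HTML."""
    return f"<p>{html.escape(comment)}</p>"


# Payloads a tester might submit when probing for injection and XSS
xss_payload = "<script>alert(1)</script>"
sql_payload = "alice'; DROP TABLE users;--"

print(render_comment(xss_payload))
print(is_valid_username(sql_payload))
```

A tester would submit payloads like these through the real interface and confirm the application rejects or neutralizes them in the same way.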

Performance and Load Testing

For applications with traffic peaks, performance must be rigorously tested. Load testing tools generate thousands of virtual concurrent users to simulate real-world usage spikes. QA testers establish performance success criteria like maximum page load times and transaction response SLAs.

They stress test core usage workflows while monitoring server CPU, memory, disk and network utilization. Bottlenecks are identified, and load testing helps determine optimal infrastructure configurations. Advanced tools can model complex user behaviours, geographic distributions and step-load patterns.
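The idea of virtual users and a success criterion can be sketched without any load testing tool. In the example below, simulated_request only sleeps for a few milliseconds as a stand-in for a real HTTP call, and the 95th-percentile threshold is an assumed criterion; a dedicated tool would be used for realistic load.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def simulated_request(user_id: int) -> float:
    """Stand-in for one user's request; returns the observed latency in seconds."""
    start = time.perf_counter()
    time.sleep(0.001 * (1 + user_id % 5))  # placeholder for the real call
    return time.perf_counter() - start


def run_load_test(virtual_users: int, max_workers: int = 20) -> list[float]:
    """Issue one request per virtual user, up to max_workers at a time."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(simulated_request, range(virtual_users)))


def p95(latencies) -> float:
    """95th-percentile latency (index 94 of the 99 percentile cut points)."""
    return quantiles(latencies, n=100)[94]


def meets_criterion(latencies, max_p95_seconds: float) -> bool:
    """Check the run against an assumed p95 latency target."""
    return p95(latencies) <= max_p95_seconds
```

A percentile is used instead of an average because a small share of very slow responses is exactly what users notice during a traffic spike.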

Performance profiling pinpoints inefficient code sections via tools like flame graphs. Caching, parallelization and other optimizations are evaluated. Mobile apps are tested over various network conditions. Performance regressions must be detected during development to avoid production issues. Mature programs conduct load testing iteratively.

Localization and Globalization Testing

As software expands globally, localization testing validates correct behaviour for all target languages and locales. Testers evaluate number and date formatting, text encoding, bidirectional display support and more. They check for any encoding or display errors when content is translated to right-to-left languages like Arabic or Hebrew.
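Two of these checks, right-to-left content and text encoding, can be sketched with Python's standard library. The helper names are hypothetical; the example only shows how a test might flag strings that need bidirectional layout or that a legacy encoding cannot store.

```python
import unicodedata


def contains_rtl(text: str) -> bool:
    """True if any character has a right-to-left bidirectional class."""
    return any(unicodedata.bidirectional(ch) in {"R", "AL"} for ch in text)


def encodable_in(text: str, encoding: str) -> bool:
    """True if the text can be stored in the given encoding without errors."""
    try:
        text.encode(encoding)
    except UnicodeEncodeError:
        return False
    return True


greetings = {"en": "Hello", "fr": "café", "he": "שלום", "ar": "مرحبا"}

for locale_code, text in greetings.items():
    print(locale_code, contains_rtl(text), encodable_in(text, "latin-1"))
```

Strings flagged as right-to-left are candidates for a visual layout review, and any string that fails the encoding check points to a storage or transport layer that is not yet Unicode-safe.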

Cultural factors are also considered, like honorific name prefixes used in some Asian languages. Test automation frameworks support localization by parameterizing text, ensuring translations don’t introduce new defects. Translated software undergoes full functional, performance and accessibility testing like the original.

Ongoing collaboration with localization teams helps address issues early. Some challenges involve supporting character sets beyond the Latin alphabet or ensuring culturally appropriate content. Attention to these details enhances the user experience everywhere a product is sold.

Accessibility Compliance Testing

Accessibility testing evaluates compliance with standards like WCAG and Section 508 to ensure software can be used by everyone. QA testers evaluate visual elements, keyboard navigation, colour contrast and text alternatives for non-text content. They use screen readers to check for proper headings, form labels and navigation order.
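Colour contrast is one accessibility check that reduces to arithmetic. The sketch below follows the relative luminance and contrast ratio definitions from WCAG 2.x and compares the result with the 4.5:1 minimum that level AA sets for normal-size text; the function names are my own.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value, per WCAG 2.x."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background) -> float:
    """Ratio of the lighter to the darker luminance, each offset by 0.05."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground, background) -> bool:
    return contrast_ratio(foreground, background) >= 4.5


WHITE = (255, 255, 255)
print(passes_aa_normal_text((118, 118, 118), WHITE))  # grey #767676 on white
print(passes_aa_normal_text((119, 119, 119), WHITE))  # grey #777777 on white
```

The two greys differ by a single step per channel yet fall on opposite sides of the threshold, which is why contrast is better measured than judged by eye.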

Captions are tested for videos along with audio descriptions. Dynamic content changes are validated as being accessible, too. Compliance scanners help identify low-hanging issues, but manual testing remains essential. Test automation frameworks are enhanced to include accessibility checks.

Developers may not consider all disability needs, so testers provide valuable expertise. They consult guidelines to validate compliance at AA or higher levels. Findings are reported with recommendations to help prioritize fixes. Continuous evaluation ensures compliance as products evolve. Accessibility is a legal requirement in many jurisdictions.


Regression Testing

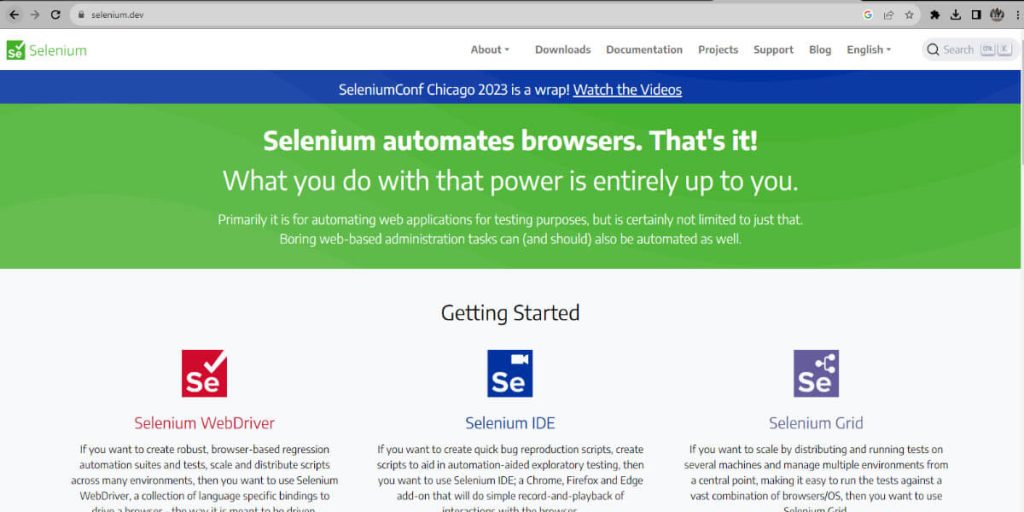

Regression testing verifies that existing functionality has not been accidentally broken by a new build or code change. Automated regression test suites are developed with tools such as Selenium or Cypress to simulate user flows programmatically. Test cases cover core workflows, edge cases, and browser/device combinations.

Tests are run on a CI/CD pipeline with every code commit. This allows developers to identify regressions immediately, before they affect other teams. Flaky, brittle or slow tests are refactored so the suite remains maintainable as the codebase grows. Selective re-runs focus only on relevant tests when changes are limited in scope.

Manual exploratory testing still adds value post-deployment to catch any subtle regressions. Testers also help develop new automated test cases from bugs found. Mature programs apply techniques like contract testing, interface testing and component integration testing for thorough coverage. Continuous regression testing supports a sustainable long-term testing practice.
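Selective re-runs can be approximated with a mapping from source paths to the tests that exercise them. The sketch below is a simplified assumption of how such a mapping might look; the directory names and test files are hypothetical, and real pipelines often derive the mapping from coverage data instead.

```python
from fnmatch import fnmatchcase

# Hypothetical mapping from source path patterns to relevant test files
TEST_MAP = {
    "checkout/*": ["tests/test_checkout.py", "tests/test_payments.py"],
    "accounts/*": ["tests/test_login.py"],
    "shared/*": [
        "tests/test_checkout.py",
        "tests/test_login.py",
        "tests/test_search.py",
    ],
}


def select_tests(changed_files, test_map):
    """Return the sorted, de-duplicated tests relevant to the changed files."""
    selected = set()
    for path in changed_files:
        for pattern, tests in test_map.items():
            if fnmatchcase(path, pattern):
                selected.update(tests)
    return sorted(selected)


print(select_tests(["checkout/cart.py"], TEST_MAP))
```

A cautious pipeline would fall back to the full suite when a changed file matches no pattern, since an unmapped file says nothing about which tests are safe to skip.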

Defect Tracking and Reporting

Bug tracking systems provide structure for testers to document each finding methodically and consistently. Clear templates capture all relevant details to aid fast resolution. These include reproduction steps, expected vs. actual results, screenshots or videos, severity, affected platforms and more.
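The template fields listed above map naturally onto a small data structure. This is a sketch of one possible shape, not the schema of any particular bug tracker; the field names and severity levels are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    CRITICAL = "critical"
    MAJOR = "major"
    MINOR = "minor"


@dataclass
class BugReport:
    title: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    severity: Severity
    affected_platforms: list[str]
    attachments: list[str] = field(default_factory=list)  # screenshots or videos

    def missing_fields(self) -> list[str]:
        """Names of required fields that were left empty."""
        required = {
            "title": self.title,
            "steps_to_reproduce": self.steps_to_reproduce,
            "expected_result": self.expected_result,
            "actual_result": self.actual_result,
            "affected_platforms": self.affected_platforms,
        }
        return [name for name, value in required.items() if not value]


report = BugReport(
    title="Checkout button unresponsive",
    steps_to_reproduce=[],
    expected_result="Order confirmation page opens",
    actual_result="Nothing happens on click",
    severity=Severity.MAJOR,
    affected_platforms=["Safari on iOS"],
)
print(report.missing_fields())
```

Checking for empty fields at submission time is a cheap way to keep incomplete reports from reaching developers.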

Well-written reports save developer troubleshooting time. Automated parsing of natural language test cases also helps generate structured bug reports. QA testers assign issues to the appropriate team and track them to completion.

Communication is vital – developers are updated on any blocking bugs. Testers validate fixes by re-running impacted tests before closing issues. Feedback loops ensure quality throughout. Regular reporting measures testing progress and helps stakeholders understand outstanding risks. Mature programs establish standard defect life cycles integrated with development.
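A defect life cycle can be modelled as a small state machine. The states and transitions below are an assumed example rather than a standard; each team defines its own workflow in its tracker.

```python
# Assumed workflow: which states a defect may move to from each state
ALLOWED_TRANSITIONS = {
    "new": {"assigned", "rejected"},
    "assigned": {"fixed"},
    "fixed": {"verified", "reopened"},
    "reopened": {"assigned"},
    "verified": {"closed"},
    "rejected": set(),
    "closed": set(),
}


def advance(current: str, target: str) -> str:
    """Return the new state, or raise if the workflow forbids the move."""
    if target not in ALLOWED_TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move defect from {current!r} to {target!r}")
    return target


state = "new"
for step in ("assigned", "fixed", "verified", "closed"):
    state = advance(state, step)
print(state)
```

Because "closed" is reachable only through "verified", the model enforces the rule that a tester confirms each fix before the issue is closed.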

In summary, thorough testing requires diligent effort across every stage of the development lifecycle. But with the right people, tools, techniques and collaboration, it delivers immense value by helping teams release higher-quality, more reliable software. Testing truly is a team sport.